Conversions without cookies

How to track conversions from Google Ads and Meta without any cookies

Since this time of the year is considered the post-(Christmas)-cookies-time in my household, allow me to discuss a concept I believe is not very widely used, but potentially solves a couple of MarTech issues i.m.h.o…

Pretext

Usually, when you advertise on Google Ads or Meta (or TikTok or LinkedIn or wherever… for the purpose of this article, I’ll stick to the first 2), you set up your (client-side) tag management system (e.g. Google Tag Manager), add a bunch of conversion tags to your website (or app) and collect consent to use the necessary cookies of the advertisement partners you work with. Whenever a conversion happens on your site, the tag fires and notifies the advertisement partner, sending along some information from those cookies so the partner can identify which activity on their platform is connected to the conversion.

This setup (maybe with a few developments here and there) is very common and will remain the usual approach for quite some time I presume. However, there is an alternative approach to sending conversions to your advertisement partners. This approach evolved as “offline conversions” - some conversions simply do not happen on your site directly or tracking them is complex. In such cases both Google Ads and Meta allow for delayed notification of conversions, as long as you provide some kind of identification to them, so they know what activity a conversion should be attributed to.

What if you use this alternate approach to simply send all the relevant conversions? Or, if you prefer, use it to send over conversions your (client-side) tracking did not already catch?

Client-side tracking is imperfect. Current estimates talk about 30% of traffic on your site not being trackable (because of ITP, iOS14+, …). In a country like Switzerland, where Apple products are widely used, and considering people who simply won’t consent to cookies even if you kindly ask them, this can climb to 40% (based on my personal estimate). But this is not the only way of tracking traffic on your site…

Server-side tracking

Now, I am not talking about server-side tag managers here (even though they are useful). They still require the user’s browser to send information to the tag manager, so there is still some client-side component (with all its limitations) involved. I am instead talking about your webserver’s logs.

When someone opens your website, their browser requests a file (a .html or the like) from the server. Usually the server logs those requests (or at least you can configure it to do so). Some website hosts call those “access logs”, some others “http logs”, but that’s the same thing…

Those server-side logs contain some rudimentary information on the device sending a request to the server, mostly the IP address and user agent of the browser. They also contain a timestamp, obviously. And they contain the full URI used in the request.
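To make this a bit more tangible, here is a minimal sketch of how a single log line could be parsed. It assumes the widely used Apache/nginx "combined" log format; your host may log differently, so treat the pattern and the sample line as assumptions rather than as my actual setup.

```python
import re
from datetime import datetime

# Assumed layout: Apache/nginx "combined" log format.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<uri>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_log_line(line):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the bracketed time, e.g. 05/Jan/2025:10:15:32 +0100, to a datetime.
    fields["timestamp"] = datetime.strptime(fields.pop("time"), "%d/%b/%Y:%H:%M:%S %z")
    fields["status"] = int(fields["status"])
    return fields


# Made-up sample line (documentation IP range, invented Click ID).
sample = (
    '203.0.113.7 - - [05/Jan/2025:10:15:32 +0100] '
    '"GET /landing.html?gclid=abc123 HTTP/1.1" 200 5120 '
    '"https://www.google.com/" "Mozilla/5.0 (Macintosh)"'
)
row = parse_log_line(sample)
```

The resulting dict carries exactly the four things mentioned above: IP, user agent, timestamp and the full URI.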

If we consider (successful) requests to a webserver’s .html files “pageviews”, we can use those logs to model sessions - just like the client-side tracking would do, too. OK, there are no events (clicks etc.) other than downloads, as those do not trigger a request to the server immediately. Identifying users (even if they log in) might also be rather difficult (unless a login also triggers a request to your webserver 😜), but pageviews are already very useful.
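A rough sketch of what "modeling sessions" can mean, outside of any DWH: pageviews are grouped per device (IP plus user agent) and a new session starts after a period of inactivity. The 30-minute timeout and the dict keys are assumptions for illustration, not a description of my SQL model.

```python
from datetime import datetime, timedelta

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed inactivity timeout


def build_sessions(pageviews):
    """Group pageviews into sessions per (ip, agent) using an inactivity timeout.

    `pageviews` is an iterable of dicts with the keys "ip", "agent",
    "timestamp" and "uri". Returns a list of sessions; each session is a
    list of pageviews in chronological order.
    """
    sessions = []
    open_session = {}  # (ip, agent) -> session currently open for that device
    for pv in sorted(pageviews, key=lambda p: p["timestamp"]):
        key = (pv["ip"], pv["agent"])
        current = open_session.get(key)
        # Start a new session for unknown devices or after a long pause.
        if current is None or pv["timestamp"] - current[-1]["timestamp"] > SESSION_TIMEOUT:
            current = []
            sessions.append(current)
            open_session[key] = current
        current.append(pv)
    return sessions
```

The first element of each session is then the landing pageview, which becomes important further down.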

Now, after loading those logs into your favorite data warehouse (DWH), you probably want to filter some irrelevant traffic first (or do this while you model the data, cf. below): client-side tracking is fairly ok at detecting and excluding bots, but the access logs don’t natively filter anything. Good bots will at some point send a request to your robots.txt, so their IP/agent can immediately be identified as a bot. For other traffic you can set up a pipeline to determine if an IP/agent belongs to a known bot (I can recommend IPQS for this, but there are plenty of alternatives… please consider the “note on consent” below!) and/or add some anomaly detection (a session requesting more than 3 pages within 2 seconds is unlikely to be relevant to you).
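The two home-made filters mentioned here (robots.txt requests and request bursts) could look roughly like this. The function names and the dict keys are made up for this sketch; the thresholds are the ones from the paragraph above.

```python
from datetime import datetime, timedelta

BURST_PAGES = 3                       # "more than 3 pages ..."
BURST_WINDOW = timedelta(seconds=2)   # "... within 2 seconds"


def robots_txt_devices(requests):
    """Return the set of devices (ip, agent) that requested /robots.txt."""
    return {
        (r["ip"], r["agent"])
        for r in requests
        if r["uri"].split("?", 1)[0] == "/robots.txt"
    }


def is_burst(session):
    """True if the session has more than BURST_PAGES pageviews within BURST_WINDOW."""
    times = sorted(pv["timestamp"] for pv in session)
    # Compare every pageview with the one BURST_PAGES positions later:
    # if those two are close enough, BURST_PAGES + 1 pages fell into the window.
    return any(
        later - earlier <= BURST_WINDOW
        for earlier, later in zip(times, times[BURST_PAGES:])
    )
```

Sessions of devices returned by `robots_txt_devices`, and sessions for which `is_burst` is true, would simply be dropped before any further modeling.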

Finally, a little data modeling [1] to parse the logs and construct sessions from them is not only useful for the conversion topic: you can also use it as a baseline of how much traffic your site actually receives. Again, those logs are not restricted by any of the client-side limitations, so at least you get an idea of how many sessions/devices you had on your site.

Conversions in access logs

Server-side logs are useful in many ways, and one of them is tracking conversions: if you can define a conversion as a pageview (rather than a click on a button), it will show up in the access logs. This could be achieved by simply using a thank-you page after a checkout.

If the URI of this page contains (e.g. as query parameters) the information you need to enrich a conversion (particularly its value) or at least contains a reference to a transaction (like an ID) so you can look up this transaction in your DWH (where the logs are modeled anyway), all you need is the identifier the advertisement partner requires to attribute this conversion to.

After having modeled sessions in your DWH [2], you can obviously identify the first pageview of a session and its URI. For most advertisement partners (like Google Ads or Meta), this first URI will contain a “Click ID” in a query parameter - for Google this would be the parameter gclid, for Meta it is fbclid etc. [3] A regular expression can extract this ID and you are ready to send a conversion to your advertisement partner.
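As an illustration, the extraction could be sketched like this. Instead of a regular expression, this variant uses Python's URL parser, which does the same job outside the DWH; the type labels 'g' and 'fb' mirror the clid_type values used in the SQL of this article, everything else is an assumption.

```python
from urllib.parse import parse_qs, urlsplit

# Query parameter -> short type label (labels as used for clid_type in the SQL).
CLICK_ID_PARAMS = {"gclid": "g", "fbclid": "fb"}


def extract_click_id(uri):
    """Return (clid_type, clid_value) for the first known Click ID in `uri`, else None."""
    params = parse_qs(urlsplit(uri).query)
    for name, clid_type in CLICK_ID_PARAMS.items():
        values = params.get(name)
        if values:
            return clid_type, values[0]
    return None
```

Applied to the first pageview of each session, this gives one Click ID (or none) per session.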

Also, you don’t have to replace your current client-side tracking setup. If you have the client-side tracking data in the same DWH, you can simply look up which Click IDs are already caught (and presumably sent to the advertisement partner) by the client-side tracking and only continue processing those Click IDs and conversions not already covered.
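In its simplest form, this lookup is just an anti-join on the Click ID. A tiny sketch, with a hypothetical `click_id` key on each conversion:

```python
def conversions_to_send(server_side_conversions, client_side_click_ids):
    """Keep only the conversions whose Click ID the client-side tracking has not seen."""
    already_covered = set(client_side_click_ids)
    return [
        conversion
        for conversion in server_side_conversions
        if conversion["click_id"] not in already_covered
    ]
```

In the DWH the same thing would typically be a `not exists` or a left join filtered on null.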

Sending conversions to Google Ads

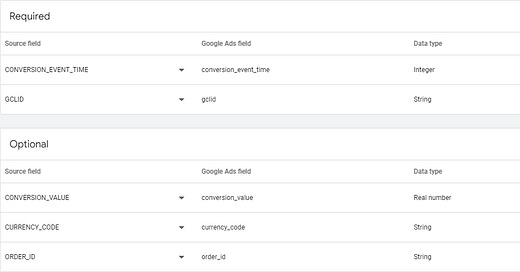

Google Ads has a “Data Manager” which can directly connect to your DWH and import conversions from it. It has some flaws, as Lukas Oldenburg recently summarized, but for me it works fine. Basically, you set up a connection in “Data Manager” importing data from a table/view. This view should contain the gclid, the conversion’s timestamp and optionally a value.

As I am not sure if Google Ads deduplicates conversions, I made sure in the SQL view it only exports conversions it didn’t export previously. Also, apparently you only have 14 days to import a conversion to Google Ads after the initial click happened, so the event’s timestamp and the time the Click ID was observed should not be too far apart. But if you import and model the access logs (and your client-side tracking data) at least once per week, this should be fine:

    select
        se.clid_value as gclid
      , date_part(epoch_second, pv.timestamp) as conversion_event_time
      , concat('https://www.', pv.page_url_window_hostname, pv.page_url_window_path) as event_source_url
      , tr.amount as conversion_value
      , 'CHF' as currency_code
      , tr.transaction_identifier as order_id
    from t_accesslog_pageview pv
    join t_accesslog_session se
      on se.session = pv.session
      and se.clid_type = 'g' -- only sessions with a gclid are relevant
    join [your table of transactions] tr
      -- [^&]+ stops at the next query parameter; a greedy (.*) would swallow everything after the ID
      on tr.transaction_identifier = regexp_substr(pv.page_url_window_querystring, 'transaction_id=([^&]+)', 1, 1, 'ie', 1)
    where pv.page_url_window_hostname = 'your-website.com' -- the conversion of interest
      and regexp_like(pv.page_url_window_querystring, '.*transaction_id=[^&]+.*', 'i') = true -- the conversion of interest
      and pv.timestamp > dateadd(day, -7, current_timestamp()) -- if connector in google ads is scheduled to run weekly
    ;

Sending conversions to Meta

The approach with Meta is fairly similar, but there is no “Data Manager”. Instead, Meta has its Conversions API. Once again, you need a table/view in your DWH, but instead of sessions starting with a gclid, we look for sessions with an fbclid. Well, not quite - the value of fbclid is only a part of the fbc parameter the Conversions API expects. Also, with Meta you only have 7 days (instead of 14):

    select
        -- Meta asks for the time the fbclid was first observed; the conversion pageview's timestamp is an approximation
        concat('fb.1.', date_part(epoch_millisecond, pv.timestamp), '.', se.clid_value) as fbc
      , date_part(epoch_second, pv.timestamp) as event_time
      , concat('https://www.', pv.page_url_window_hostname, pv.page_url_window_path) as event_source_url
      , tr.amount as value
    from t_accesslog_pageview pv
    join t_accesslog_session se
      on se.session = pv.session
      and se.clid_type = 'fb' -- only sessions with a fbclid are relevant
    join [your table of transactions] tr
      -- [^&]+ stops at the next query parameter; a greedy (.*) would swallow everything after the ID
      on tr.transaction_identifier = regexp_substr(pv.page_url_window_querystring, 'transaction_id=([^&]+)', 1, 1, 'ie', 1)
    where pv.page_url_window_hostname = 'your-website.com' -- the conversion of interest
      and regexp_like(pv.page_url_window_querystring, '.*transaction_id=[^&]+.*', 'i') = true -- the conversion of interest
      and pv.timestamp > dateadd(day, -7, current_timestamp()) -- older events can't be sent to meta anyway
    ;

Meta will then complain about the event quality, as the event doesn’t contain an IP or user agent (which you could easily add if you wanted) or any other details about the user. But it still works fine with just the fbclid value, you’ll just have to explain this to your social media marketing manager 😎
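For reference, the fbc value built in the view above follows the pattern fb.1.<unix time in milliseconds>.<fbclid>. As far as I understand Meta's documentation, the "1" is the value to use when the field is generated on a server, and the time should be the moment the fbclid was first observed. A small sketch with a hypothetical helper name:

```python
from datetime import datetime, timezone


def build_fbc(fbclid, observed_at):
    """Format an fbc value as fb.1.<unix time in ms>.<fbclid>.

    `observed_at` should be a timezone-aware datetime, ideally the timestamp
    of the session's first pageview (where the fbclid showed up).
    """
    millis = int(observed_at.timestamp() * 1000)
    return f"fb.1.{millis}.{fbclid}"
```

If your session model keeps the timestamp of the first pageview, using that instead of the conversion's timestamp would be the closer match.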

As there is no Data Manager in place, you’ll have to set up a pipeline exporting the data of this view and sending it to the Conversions API. You can of course use a reverse ETL tool (like Census or Hightouch), but I stick to the tool I use for virtually all my pipelines - good old Azure Data Factory [4].

A note on consent

Since those access logs are collected by the server, the user never had a chance to tell you whether they approve of you using their PII (particularly their IP address) for further processing or sending any conversion to Google Ads or Meta. If you happen to work under a strict data privacy regime, you can't use PII without consent.

Now, obviously I am not a lawyer - so do not trust me on this!

The (current) Swiss data privacy laws (which is the regime I happen to work in) allow for the processing of PII as long as the user is informed about the use and is provided with an (easy) option to opt out or get deleted. Other laws (like GDPR) might require consent or PII to be irreversibly anonymized before processing. Since we're not trying to send the user's IP back to Google Ads or Meta, that is not an issue here. But we certainly use the IP in the model preparing the data. Here, only a truncated or hashed (SHA-256 or stronger) IP should be used.
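Both options could be sketched as follows. The prefix lengths (/24 for IPv4, /48 for IPv6) and the salt are assumptions of this sketch, not something a law or this article prescribes, and whether the result counts as sufficiently anonymized is again a question for a lawyer.

```python
import hashlib
import ipaddress


def truncate_ip(ip):
    """Zero the host part of an IP: keep /24 for IPv4 and /48 for IPv6 (assumed prefixes)."""
    address = ipaddress.ip_address(ip)
    prefix = 24 if address.version == 4 else 48
    network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
    return str(network.network_address)


def hash_ip(ip, salt):
    """SHA-256 over salt + IP, returned as a hex string (the salt is an assumption)."""
    return hashlib.sha256((salt + ip).encode("utf-8")).hexdigest()
```

Keep in mind that truncation makes the session model coarser: two devices in the same network range with the same user agent can no longer be told apart.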

[1] Let me be blunt: this is definitely the hardest part! If you are interested, please contact me - I’m happy to share and discuss my SQL model.

[2] This approach assumes a conversion to happen within the session the Click ID was observed in. A device switch or simply a (self-modeled) timeout of the session will break this as there are no cookies or user-identifiers available. But hey, last-click attribution is better than nothing, right?

It’s just another API - no need to worry 😉 Just convert the view’s data to an array:

    select
        array_agg(object_construct(
            'event_name', 'your event name'
          , 'event_time', event_time
          , 'action_source', 'website'
          , 'event_source_url', event_source_url
          , 'user_data', object_construct(
                'fbc', fbc
            )
          , 'custom_data', object_construct(
                'value', value
              , 'currency', 'CHF'
            )
        )) as data
    from your_namespace.your_schema.your_view
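If you don't use Azure Data Factory, the final step is a plain HTTP POST. The sketch below only builds the request and does not send it. The URL shape (Graph API host, version, pixel ID, /events, access token as a query parameter) reflects my understanding of Meta's documentation; `pixel_id`, `access_token` and `api_version` are placeholders you have to supply, and the function name is made up.

```python
import json
import urllib.parse
import urllib.request


def build_capi_request(events, pixel_id, access_token, api_version):
    """Build (but do not send) a POST request carrying the `data` array from the query above."""
    query = urllib.parse.urlencode({"access_token": access_token})
    url = f"https://graph.facebook.com/{api_version}/{pixel_id}/events?{query}"
    body = json.dumps({"data": events}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would actually send the events, so test this against a test pixel first.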