In my previous post I mentioned a data model based on logs of requests sent to a webserver:

[…]

When someone opens your website, their browser requests a file (a .html or the like) from the server. And usually the server logs those requests (or at least you can make it to). Some website hosts call those “access logs”, some others “http logs”, but that’s the same thing…

Those server-side logs contain some rudimentary information on the device sending a request to the server, mostly the IP address and user agent of the browser. They also contain a timestamp, obviously. And they contain the full URI used in the request.

If we consider (successful) requests to a webserver’s .html files “pageviews”, we can use those logs to model sessions - just like the client-side tracking would do, too. OK, there are no events (clicks etc.) other than downloads, as those do not trigger a request to the server immediately. Identifying users (even if they log in) might also be rather difficult (unless a login also triggers a request to your webserver 😜), but pageviews are already very useful.

[…]

Regardless of those caveats, I believe those logs can be rather useful - either to…

develop an understanding of the true dimension of traffic on a site without any filters (ITP, iOS14+, …) or

tracking conversions not tracked by client-side tracking (that’s what the mentioned post was about) or

tracking events otherwise not trackable.

The first two are obvious (I presume), let me quickly explain what I mean by the third: Say you have some file users can download from your site. You add a download button/link and can track the click on it via client-side tracking, cool. But what if the file is something you want users to download to their phone, like a .vcf (virtual contact file)? You might then add a QR code to the page for people to scan with their phone. Even if that QR code is just a link to the same page, where the user then has to click the download button/link, you create a new session on a different device - probably you could somehow stitch those sessions together. If that QR code links to the .vcf directly, there is not even a (trackable) session, it’s just a download with a different device.

The access logs catch that download in any case, even if there isn’t a page opened by scanning the QR-code. You still have to do some stitching to find out which session this download belongs to (as the device is different, the IP and user agent are likely different, too), but unless you have tons of pageviews on the page displaying the QR code in a rather short time, using timestamps this is certainly possible. But that might be a story for another post 😜

Back to the point: A data model on top of access logs1 seems useful - not to replace client-side tracking, but to extend it, by using an approach (almost) as old as the internet (in fact, this is how digital analytics started) and capable of answering questions the client-side approach can't address. The presented solution and its results are imperfect, sure, but better than not utilizing them at all if you ask me. So let’s revive and adjust this old solution to modern environments and approach it step by step…

1. Import the logs

Before we can model anything, we need to get the logs into our data warehouse (DWH). How this works really depends on your webserver, the DWH you use and ELT-tools you might be able to leverage. The following steps, even though I try to be agnostic, will illustrate how I do this in Snowflake and most of the websites I want to analyze are hosted by Azure Web Services. That means, I can simply set the logs to be stored in an Azure Storage container and auto ingest (frequently “copy into”) them as strings to Snowflake. Other websites I analyze are hosted on some classic hosting provider, where I can access the logs via SFTP and frequently copy them into Snowflake via Azure Data Factory (or any other ELT tool).

However you get there, as a first step, you will have a raw copy of the logs in your DWH. And here we already have to think about data privacy. Annoying, I know… The logs contain the user’s IP address, which is typically considered PII, depending on the data privacy laws you operate under.

Now, obviously I am not a lawyer - so do not trust me on this!

The (current) Swiss data privacy laws (which I happen to have to abide by) allow for the processing of PII as long as the user is informed about the use and is provided with an (easy) option to opt out or get deleted. Other laws (like GDPR) might require consent or PII to be irreversibly anonymized before processing. Since you can’t really ask for consent with server-side logs, the only option to continue working with the logs (i.m.h.o.) is to obfuscate the IP addresses in the log. And to my understanding, this would have to happen pretty much before anything else - ideally even before duplicating the logs in the DWH, which might be difficult in today’s ELT-world (with the T being behind the L, not the other way around).

If you have to do this and truncated/hashed all the IPs, you can (again: i.m.h.o.) proceed just as if you had plain IPs in your raw dataset. In the end, their actual values (the network blocks) are rather irrelevant here - we just need them as part of the device identification later on. Not having plain IPs limits the capabilities to identify bots or geolocate devices using third party tools, but I guess that’s just something we have to live with.

Just a hint on how I would hash (rather than truncate) the IPs: RegEx-search for patterns of IPv4 and IPv6 in the logs, extract them, irreversibly hash2 (SHA-256 or stronger) their values and replace the original values with the now anonymized (hashed) values.

You will then have a raw log table that looks something like this:

You might be lucky, and your logs are delivered in JSON format, which makes the next step a lot easier. But if it contains strings like in the screenshot, you’ll next have to parse those…

2. Parse and structure the logs

The goal of this step is to transform the raw logs to a structured table. In case your logs are already in JSON format, you might skip this step (as most DWHs today can handle JSON data very well). But if it’s strings of concatenated pieces of information, you’ll certainly want to parse those.

Some DWHs offer a very neat little function to parse logs called GROK() - cf. here and here for more information. However, Snowflake doesn’t.3 Hence, I (for now) had to build a parser myself:

with cte_replaceDoubleQuotes AS (

select

hostname -- in my case, the hostname is not in the logs, but in the file name during copy into

, replace(log, '\\\\"', '|||') as log -- backslashes need to be escaped if this code runs inside a procedure

from [staged logs table]

)

, cte_substrings_left AS (

select

hostname

, log

, position('[' in log) + 1 as timestamp_start

, position(']' in log) - 1 as timestamp_end

, position('"GET ' in log) + 5 as path_start

, position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 3 as path_end

, position(' ' in substring(log, position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 3)) + position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 3 as protocol_start

, position('"' in substring(log, position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 3)) + position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 1 as protocol_end

, position('"' in substring(log, position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"GET ' in log) + 3)) + position(' ' in substring(log, position('"GET ' in log) + 5)) + position('"get ' in log) + 4 as status_start

from cte_replaceDoubleQuotes

where position('"GET ' in log) > 0

)

, cte_substrings_middle AS (

select

hostname

, log

, timestamp_start

, timestamp_end

, path_start

, path_end

, protocol_start

, protocol_end

, status_start

, position(' ' in substring(log, status_start)) + status_start - 2 as status_end

, position('"' in substring(log, status_start)) + status_start as previouspage_start

, position('"' in substring(log, position('"' in substring(log, status_start)) + status_start)) + position('"' in substring(log, status_start)) + status_start - 2 as previouspage_end

from cte_substrings_left

)

, cte_substrings as (

select

hostname

, log

, timestamp_start

, timestamp_end

, path_start

, path_end

, protocol_start

, protocol_end

, status_start

, status_end

, previouspage_start

, previouspage_end

, position('"' in substring(log, previouspage_end + 2)) + previouspage_end + 2 as useragent_start

, position('"' in substring(log, position('"' in substring(log, previouspage_end + 2)) + previouspage_end + 2)) + position('"' in substring(log, previouspage_end + 2)) + previouspage_end as useragent_end

from cte_substrings_middle

)

, cte_fields as (

select

substring(log, 0, position(' ' in log) - 1) as ip

, try_to_timestamp_ntz(substring(log, timestamp_start, timestamp_end - timestamp_start + 1), 'DD/MON/YYYY:HH24:MI:SS TZHTZM') as timestamp

, concat(hostname, substring(log, path_start, path_end - path_start + 1)) as page_url_window_url

, substring(log, protocol_start, protocol_end - protocol_start + 1) as page_url_window_protocol

, hostname as page_url_window_hostname

, substring(log, path_start, path_end - path_start + 1) as page_url_window_path

, try_to_number(substring(log, status_start, status_end - status_start + 1)) as status

, case

when previouspage_end - previouspage_start = 0 then null

else substring(log, previouspage_start, previouspage_end - previouspage_start + 1) end as previouspage_url_window_url

, substring(log, useragent_start, useragent_end - useragent_start + 1) as useragent

from cte_substrings

)

select

ip

, timestamp

, replace(page_url_window_url, '|||', '\\\\"') as page_url_window_url

, replace(page_url_window_protocol, '|||', '\\\\"') as page_url_window_protocol

, page_url_window_hostname

, replace(page_url_window_path, '|||', '\\\\"') as page_url_window_path

, status

, replace(previouspage_url_window_url, '|||', '\\\\"') as previouspage_url_window_url

, replace(useragent, '|||', '\\\\"') as useragent

from cte_fields

where ip is not null

and timestamp is not nullDepending on the format of your logs, you’ll probably have to adjust this. It took me quite a while to figure it out, so let’s hope GROK() is (soon) available to you 😜 Another function to utilize might be PARSE_URL(), but only if the URI protocol is in a useful format (or replaced by a useful format before parsing).

In case you have to anonymize the IPs, but didn’t already do so during the import of the raw logs, this would be when you latest should do it (and remember to drop the staged table containing the plain IPs immediately after parsing the logs and hashing the IPs).

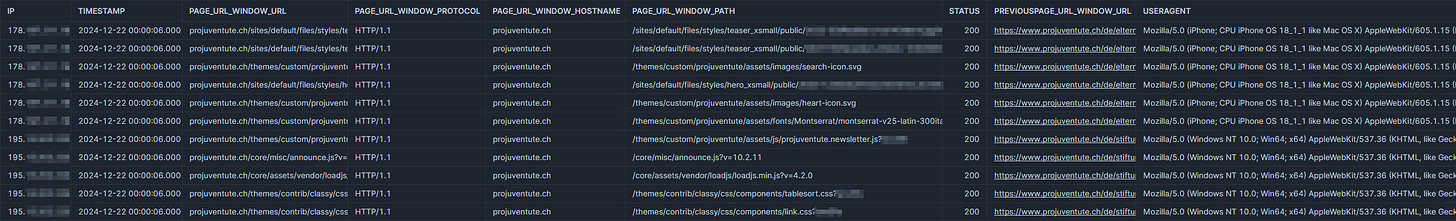

Afterwards, your structured data looks something like this:

Note: my parser didn’t the split URI’s path from query parameters and fragment identifiers.4 Feel free to add this to the parser already, I do this during the next step.

3. Identify devices

Natively server logs don’t contain a device- (or user-) ID you could use to attribute several requests to the same session (or device or user) - we’ll have to create one. The easiest way to do so is to simply concatenate the (hashed) IP and user agent:

hash(concat(ip, '_', useragent)) as user_fingerprintI call the result a “fingerprint”, but it has nothing to do with actual fingerprinting. It’s just the closest I get to identifying a user - even though I’m aware I’m rather far away from that 😅

This ID is suboptimal for multiple reasons:

IP addresses change, user agents can be manipulated

IP addresses can be shared (think of a classroom of students all visiting your site during class - they very well could all be on the same IP using the same browser)

people use multiple browsers and devices

Could this “fingerprint” be improved? If for instance you could make your webserver add some kind of session ID to each logged request or at least to each URI in the browser, so it shows up in the logs as a query parameter, this might be worth looking into. However, we’re not trying to replace client-side tracking and analytics. We mostly want a general picture of our traffic without client-side filters and collect some conversions we didn’t already catch via client-side tracking (where we have click IDs to identify a session fairly reliably). Hence, I didn’t spend any time going deeper down this rabbit hole - please let me know if you do!

4. Identify pageviews

Now we narrow down the logs to only those requests relevant to our task. We are interested in pageviews, so only requests for either .html files or for URIs without any file extension are kept:

case

when regexp_substr(page_url_window_path, '\\.[a-z0-9]{2,11}$', 1, 1, 'i') is null then '.html'

when regexp_substr(page_url_window_path, '\\.[a-z0-9]{2,11}$', 1, 1, 'i') = '.htm' then '.html'

else regexp_substr(page_url_window_path, '\\.[a-z0-9]{2,11}$', 1, 1, 'i') end as filetypeBasically: Everything shall be treated as a request for a .html if there is no other file extension present. Then, in the next step, we can use this new filetype field to exclude every request not for a .html file (or, depending on your website, you might keep other filetypes representing pages, too).

5. Identify starts of new sessions

This is very much the crux of sessionization: just very few decades ago, it was rather difficult to find the first request of a new session in a couple of million records. Today’s DWHs are not as limited - it’s really just SQL with some window functions… Let’s first find the previous pageview to each pageview:

select

ip

, useragent

, user_fingerprint

, timestamp

, page_url_window_url

, page_url_window_protocol

, page_url_window_hostname

, page_url_window_path

, page_url_window_querystring

, status

, previouspage_url_window_url

, lag(timestamp) over (partition by page_url_window_hostname, user_fingerprint order by timestamp asc) as previousevent

, lag(page_url_window_url) over (partition by page_url_window_hostname, user_fingerprint order by timestamp asc) as previousevent_url

from [table with filetypes]

where filetype = '.html' -- here is the filter for filetypes

and status = 200

qualify previousevent_url is null

or (

previousevent_url != page_url_window_url -- if the same page is requested twice, ignore the second request...

or datediff(minute, previousevent, timestamp) > 30 -- ... unless the previous request is too long ago (and the previous session is considered to be expired)

)Additionally, you could force a new session to start if specific query parameters are observed in a pageview - particularly click IDs would be relevant here.

Then we identify the pageviews which didn’t have any previous pageview or their previous pageview was too long ago - those are the session starts. While we’re at it, we also add an ID to those session-starting pageviews. The ID will be used to join sessions and pageviews within sessions later:

select

ip

, useragent

, user_fingerprint

, timestamp

, page_url_window_url

, page_url_window_protocol

, page_url_window_hostname

, page_url_window_path

, page_url_window_querystring

, status

, previouspage_url_window_url

, case

when previousevent is null

then hash(concat(page_url_window_hostname, '_', user_fingerprint, '_', timestamp))

when datediff(minute, previousevent, timestamp) > 30

then hash(concat(page_url_window_hostname, '_', user_fingerprint, '_', timestamp))

else null end as new_session

from [table with previous pageviews]Now we need to tell each pageview which session it belongs to:

select

ip

, useragent

, user_fingerprint

, timestamp

, case

when new_session is null

then lag(new_session) ignore nulls over (partition by page_url_window_hostname, user_fingerprint order by timestamp asc)

else new_session end as session

, page_url_window_url

, page_url_window_protocol

, page_url_window_hostname

, page_url_window_path

, page_url_window_querystring

, status

, previouspage_url_window_url

from [table with session starts]6. Aggregate sessions

We’re almost done! Now we simply aggregate pageviews identified to belong to a session to get some session metrics.

One serious limitation of the server-side approach is: We have no idea how long a user remained active on the last page of a session (as we have neither events nor page pings on the page). I therefore simply assume a fixed number of seconds the user might remain on the last page5 to estimate a session length:

select distinct

session

, ip

, useragent

, user_fingerprint

, page_url_window_hostname

, first_value(timestamp) over (partition by session, page_url_window_hostname order by timestamp asc) as session_start

, dateadd(second, 15, last_value(timestamp) over (partition by session, page_url_window_hostname order by timestamp asc)) as session_end

, first_value(page_url_window_url) over (partition by session, page_url_window_hostname order by timestamp asc) as firstpage_url_window_url -- min_by() might e more efficient here

, last_value(page_url_window_url) over (partition by session, page_url_window_hostname order by timestamp asc) as lastpage_url_window_url -- max_by() might be more efficient here

, datediff(

second

, first_value(timestamp) over (partition by session, page_url_window_hostname order by timestamp asc)

, dateadd(second, 15, last_value(timestamp) over (partition by session, page_url_window_hostname order by timestamp asc))

) as session_seconds

from [table with prepared pageviews]Could this be done by grouping sessions instead? Sure, go ahead!

Bonus 1: Using the “fingerprints”, you can also add the time since the previous session of the same “fingerprint” if that is relevant to you.

Bonus 2: If you apply the same methodology to add "fingerprints” to client-side tracked sessions and by using timestamps, you can map client-side and server-side sessions to each other.6 This is particularly useful if you want to identify only those sessions not already tracked client-side (and therefore probably already were sent to advertisement partners).

7. Filter / flag unwanted pageviews and sessions

One of the key benefits of server-side tracking is also a key obstacle at the same time: Nothing is filtered here. If you want to exclude traffic from employees and/or bots, this has to be added as a final step.

Exclude employee traffic

To exclude employees, you first have to identify them. There are a couple of approaches I can think of:

You might kindly ask your employees to always add a query parameter to the first page when they visit your site. Then you can search for sessions with such query parameters. Will this work with the average employee? Probably not 🙄

You could change the user agent of every browser used in your work environment to contain a specific string to look for. Filtering by this could also be applied to client-side tracking. However, you probably also want to catch employees using their private devices when visiting your site and on those this method would fail.

Lastly, you can identify IP addresses used by employees (i.e. using their logins to your IAM). Since this method can also be retroactively applied to (both client- and server-side) tracking data even if an employee used a new IP to visit your site, this is my preferred option. However, keep in mind even an employee’s IP is considered to be PII, so make sure you are allowed to use it (again: maybe in a hashed form) in this way!

Exclude bot traffic

Identifying bots is in fact my favorite part of it all. There are multiple approaches to catch them - and they can (and should) be combined to catch as many bots as possible:

“Good” bots will uncover themselves by looking for the robots.txt on your webserver. Luckily we just imported request logs from our webserver, so finding IPs and user agents looking for this particular file is rather easy.7

Other bots might still identify as bots by using certain user agents. I use the same pattern as the TS/JS isbot() function to do this.8

Using third party tools to identify IPs used by bots requires plain IPs (hence: you might need consent to process IPs). But if your local jurisdiction allows for this, I can recommend IPQS.9

You can define some rules to flag suspicious traffic, e.g. sessions with more than 2 pageviews but an average pageview length of less than 2 seconds.

Finally, you can use defined metrics (pages per session, pageview length, session length, etc.) to detect outlier sessions (isolation forests are pretty efficient at this).

In the end, you should have a pretty good picture on non-employee and non-bot traffic on your site. And with that being said, we’re done 🥳

Sounds familiar? You've been in digital analytics for quite free time, hum? 😉

OK, nothing is really irreversible… But who’s going to brute-force-decipher those IPs?

Yet - the idea to add GROK() is here if you’d like to upvote it: snowflake.discourse.group

Using PARSE_URL() would definitely make this easier 😏

Based on actually observed client-side pageview durations, this number doesn’t have to be completely arbitrary

If you are interested in my approach on this, please reach out!

Some bots (like Montastic) might use a different user agent to look for the robots.txt than for scanning the actual website - keep that in mind and use (hashed) IPs preferably

Watch this repo and get notified when they release a new version of patterns 😜